grab-site

grab-site is an easy-to-use, preconfigured web crawler designed for backing up websites. Give grab-site a URL and it will recursively crawl the site and write WARC files. Internally, grab-site uses wpull for crawling.

grab-site gives you

-

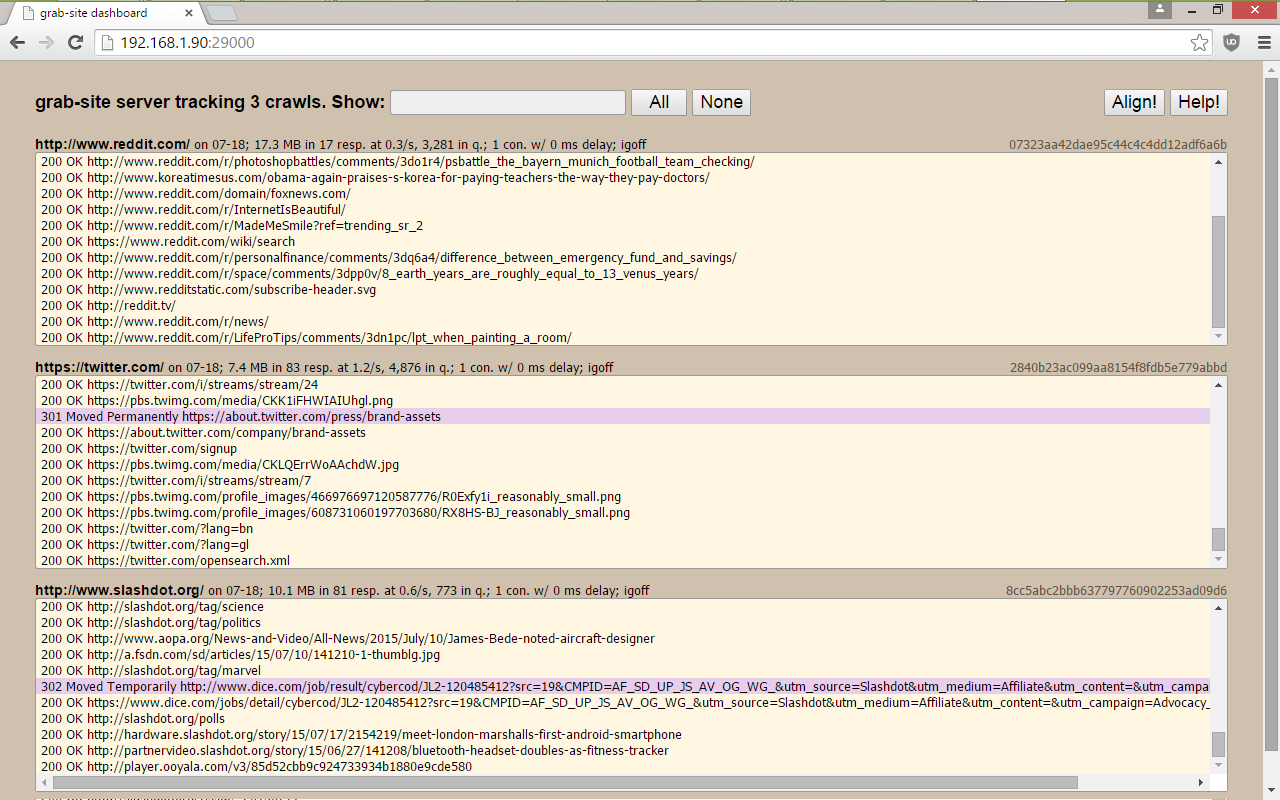

a dashboard with all of your crawls, showing which URLs are being grabbed, how many URLs are left in the queue, and more.

-

the ability to add ignore patterns when the crawl is already running. This allows you to skip the crawling of junk URLs that would otherwise prevent your crawl from ever finishing. See below.

-

an extensively tested default ignore set (global) as well as additional (optional) ignore sets for forums, reddit, etc.

-

duplicate page detection: links are not followed on pages whose content duplicates an already-seen page.

The URL queue is kept on disk instead of in memory. If you're really lucky, grab-site will manage to crawl a site with ~10M pages.

Note: grab-site currently does not work with Python 3.5; please use Python 3.4 instead.

Contents

- Install on Ubuntu

- Install on OS X

- Upgrade an existing install

- Usage

- Changing ignores during the crawl

- Inspecting the URL queue

- Grabbing a site that requires a cookie

- Stopping a crawl

- Advanced gs-server options

- Viewing the content in your WARC archives

- Inspecting WARC files in the terminal

- Thanks

- Help

Install on Ubuntu

On Ubuntu 14.04.1 or newer:

sudo apt-get install --no-install-recommends git build-essential python3-dev python3-pip

pip3 install --user git+https://github.com/ludios/grab-site

To avoid having to type out ~/.local/bin/ below, add this to your ~/.bashrc or ~/.zshrc:

PATH="$PATH:$HOME/.local/bin"

Install on OS X

On OS X 10.10:

- If Xcode is not already installed, type gcc in Terminal; you will be prompted to install the command-line developer tools. Click 'Install'.

- If Python 3 is not already installed, install Python 3.4.3 using the installer from https://www.python.org/downloads/release/python-343/

- pip3 install --user git+https://github.com/ludios/grab-site

Important usage note: Use ~/Library/Python/3.4/bin/ instead of ~/.local/bin/ for all instructions below!

To avoid having to type out ~/Library/Python/3.4/bin/ below, add this to your ~/.bash_profile (which may not exist yet):

PATH="$PATH:$HOME/Library/Python/3.4/bin"

Upgrade an existing install

To update to the latest grab-site, simply run pip3 install again:

pip3 install --user git+https://github.com/ludios/grab-site

To upgrade all of grab-site's dependencies, add the --upgrade option (not advised unless you are having problems).

Usage

First, start the dashboard with:

~/.local/bin/gs-server

and point your browser to http://127.0.0.1:29000/

Then, start as many crawls as you want with:

~/.local/bin/grab-site URL

Run your crawls inside tmux unless they are very short.

grab-site outputs WARCs, logs, and control files to a new subdirectory in the directory from which you launched grab-site, referred to here as "DIR". (Use ls -lrt to find it.)

Options, ordered by importance

Options can come before or after the URL.

- --1: grab just URL and its page requisites, without recursing.

- --igsets=IGSET1,IGSET2: use ignore sets IGSET1 and IGSET2.

  Ignore sets are used to avoid requesting junk URLs using a pre-made set of regular expressions.

  forums is a frequently-used ignore set for archiving forums. See the full list of available ignore sets.

  The global ignore set is implied and always enabled.

  The ignore sets can be changed during the crawl by editing the DIR/igsets file.

- --no-offsite-links: avoid following links to a depth of 1 on other domains.

  grab-site always grabs page requisites (e.g. inline images and stylesheets), even if they are on other domains. By default, grab-site also grabs linked pages to a depth of 1 on other domains. To turn off this behavior, use --no-offsite-links.

  Using --no-offsite-links may prevent all kinds of useful images, video, audio, downloads, etc. from being grabbed, because these are often hosted on a CDN or subdomain, and thus would otherwise not be included in the recursive crawl.

- -i / --input-file: Load list of URLs-to-grab from a local file or from a URL; like wget -i. File must be a newline-delimited list of URLs. Combine with --1 to avoid a recursive crawl on each URL.

- --igon: Print all URLs being ignored to the terminal and dashboard. Can be changed during the crawl by touching or rming the DIR/igoff file.

- --no-video: Skip the download of videos by both mime type and file extension. Skipped videos are logged to DIR/skipped_videos. Can be changed during the crawl by touching or rming the DIR/video file.

- --no-sitemaps: don't queue URLs from sitemap.xml at the root of the site.

- --max-content-length=N: Skip the download of any response that claims a Content-Length larger than N (default: -1, don't skip anything). Skipped URLs are logged to DIR/skipped_max_content_length. Can be changed during the crawl by editing the DIR/max_content_length file.

- --no-dupespotter: Disable dupespotter, a plugin that skips the extraction of links from pages that look like duplicates of earlier pages. Disable this for sites that are directory listings, because they frequently trigger false positives.

- --concurrency=N: Use N connections to fetch in parallel (default: 2). Can be changed during the crawl by editing the DIR/concurrency file.

- --delay=N: Wait N milliseconds (default: 0) between requests on each concurrent fetcher. Can be a range like X-Y to use a random delay between X and Y. Can be changed during the crawl by editing the DIR/delay file.

- --warc-max-size=BYTES: Try to limit each WARC file to around BYTES bytes before rolling over to a new WARC file (default: 5368709120, which is 5 GiB). Note that the resulting WARC files may be drastically larger if there are very large responses.

- --level=N: recurse N levels instead of inf levels.

- --page-requisites-level=N: recurse page requisites N levels instead of 5 levels.

- --ua=STRING: Send User-Agent: STRING instead of pretending to be Firefox on Windows.

- --wpull-args=ARGS: String containing additional arguments to pass to wpull; see ~/.local/bin/wpull --help. ARGS is split with shlex.split and individual arguments can contain spaces if quoted, e.g. --wpull-args="--youtube-dl \"--youtube-dl-exe=/My Documents/youtube-dl\""

  Also useful: --wpull-args=--no-skip-getaddrinfo to respect /etc/hosts entries.

- --help: print help text.
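As a rough sketch of how several of these options combine, the snippet below writes a newline-delimited URL list for -i, converts a WARC size limit from GiB to bytes, and prints the resulting command for review rather than running it. The example.com URLs and the 1 GiB limit are illustrative assumptions, not recommendations.

```shell
# Sketch only: the URLs and the 1 GiB limit below are made-up examples.
urls_file="$(mktemp)"

# -i expects a newline-delimited list of URLs.
printf '%s\n' \
    'http://example.com/page-1' \
    'http://example.com/page-2' > "$urls_file"

# --warc-max-size takes a byte count; 1 GiB = 1024 * 1024 * 1024 bytes.
warc_max_size=$((1 * 1024 * 1024 * 1024))

# Print the command for review instead of starting a crawl.
cmd="grab-site --1 -i $urls_file --warc-max-size=$warc_max_size --concurrency=2"
printf '%s\n' "$cmd"
```

Because --1 is combined with -i, each listed URL would be grabbed with its page requisites but without recursion.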

Changing ignores during the crawl

While the crawl is running, you can edit DIR/ignores and DIR/igsets; the changes will be applied within a few seconds.

DIR/igsets is a comma-separated list of ignore sets to use.

DIR/ignores is a newline-separated list of Python 3 regular expressions to use in addition to the ignore sets.

You can rm DIR/igoff to display all URLs that are being filtered out by the ignores, and touch DIR/igoff to turn it back off.
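The edits described above can also be made from the shell instead of a text editor. The following is a minimal sketch that uses a temporary directory as a stand-in for DIR; the two regular expressions are hypothetical examples for an imaginary site, and the ignore set names are the ones mentioned earlier in this README.

```shell
# Stand-in for the crawl directory ("DIR"); a real crawl creates it for you.
DIR="$(mktemp -d)"

# Append one Python 3 regular expression per line to DIR/ignores.
# Both patterns are made-up examples, not part of any shipped ignore set.
printf '%s\n' '^https?://example\.com/calendar/' >> "$DIR/ignores"
printf '%s\n' '[?&]sort=' >> "$DIR/ignores"

# Replace the comma-separated list of ignore sets in DIR/igsets.
printf '%s\n' 'forums,reddit' > "$DIR/igsets"

# Show ignored URLs (remove igoff), then hide them again (create igoff).
rm -f "$DIR/igoff"
touch "$DIR/igoff"
```

Single quotes keep the shell from interpreting the backslashes and brackets in the patterns.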

Inspecting the URL queue

Inspecting the URL queue is usually not necessary, but may be helpful for adding ignores before grab-site crawls a large number of junk URLs.

To dump the queue, run:

~/.local/bin/gs-dump-urls DIR/wpull.db todo

Four other statuses can be used besides todo: done, error, in_progress, and skipped.

You may want to pipe the output to sort and less:

~/.local/bin/gs-dump-urls DIR/wpull.db todo | sort | less -S
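To spot which hosts dominate the queue, the dumped URLs can be grouped by host. This sketch assumes gs-dump-urls prints one URL per line and uses a small inline sample in place of its real output; the sample URLs are invented.

```shell
# Stand-in for the output of: gs-dump-urls DIR/wpull.db todo
# (assumed to be one URL per line).
sample_urls='http://example.com/a
http://cdn.example.com/b.png
http://example.com/c'

# Splitting a URL on "/" puts the host in field 3; count URLs per host
# and list the busiest hosts first.
host_counts="$(printf '%s\n' "$sample_urls" | awk -F/ '{print $3}' | sort | uniq -c | sort -rn)"
printf '%s\n' "$host_counts"
```

With a real crawl, the printf would be replaced by the gs-dump-urls command itself, piped into the same awk, sort, and uniq stages.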

Grabbing a site that requires a cookie

- Log into the site in Chrome.

- Open the developer tools with F12.

- Switch to the Network tab of the developer tools.

- Hit F5 to reload the page. The developer tools will stay open and capture the HTTP requests.

- Scroll up in the list of network events and click on the first request.

- In the right pane, click the Headers tab.

- Scroll down to the Request Headers section.

- Copy the Cookie: value.

- Start grab-site with:

grab-site --wpull-args="--header=\"Cookie: COOKIE_VALUE\"" URL

Note: do not use document.cookie in the developer tools Console because it does not include HttpOnly cookies.
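The nested quoting in that command is easy to get wrong, so it can help to build the argument in a variable first and inspect it. This is a sketch with a placeholder cookie value; it assumes the cookie itself contains no double quotes or backslashes, which would need extra escaping.

```shell
# Placeholder cookie; paste the real value copied from the Request Headers.
cookie_value='sessionid=abc123; theme=dark'

# The inner double quotes keep "Cookie: ..." together as a single wpull
# argument when grab-site splits --wpull-args.
wpull_args="--header=\"Cookie: ${cookie_value}\""
printf '%s\n' "$wpull_args"

# Then, with a real address in place of URL:
# grab-site --wpull-args="$wpull_args" URL
```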

Stopping a crawl

To stop a crawl, touch DIR/stop or press ctrl-c; both do the same thing. You will have to wait for the current downloads to finish.

Advanced gs-server options

These environment variables control what gs-server listens on:

- GRAB_SITE_HTTP_INTERFACE (default 0.0.0.0)

- GRAB_SITE_HTTP_PORT (default 29000)

- GRAB_SITE_WS_INTERFACE (default 0.0.0.0)

- GRAB_SITE_WS_PORT (default 29001)

GRAB_SITE_WS_PORT should be 1 port higher than GRAB_SITE_HTTP_PORT, or else you will have to add ?host=WS_HOST:WS_PORT to your dashboard URL.

These environment variables control which server each grab-site process connects to:

- GRAB_SITE_WS_HOST (default 127.0.0.1)

- GRAB_SITE_WS_PORT (default 29001)
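As a minimal sketch of using these variables together, the snippet below moves the dashboard to a different port and derives the WebSocket port from it, so the two stay one apart. Port 8000 and the loopback-only interface are hypothetical choices, not defaults.

```shell
# Hypothetical choice: serve the dashboard on port 8000 instead of 29000.
GRAB_SITE_HTTP_PORT=8000
# Keep the WebSocket port one higher so the dashboard URL needs no ?host= parameter.
GRAB_SITE_WS_PORT=$((GRAB_SITE_HTTP_PORT + 1))
# Listen on loopback only rather than the default 0.0.0.0.
GRAB_SITE_HTTP_INTERFACE=127.0.0.1
GRAB_SITE_WS_INTERFACE=127.0.0.1
export GRAB_SITE_HTTP_PORT GRAB_SITE_WS_PORT GRAB_SITE_HTTP_INTERFACE GRAB_SITE_WS_INTERFACE

printf 'dashboard: http://%s:%s/\n' "$GRAB_SITE_HTTP_INTERFACE" "$GRAB_SITE_HTTP_PORT"
# Start gs-server from this shell, and start grab-site processes with the
# same GRAB_SITE_WS_PORT so they connect to this server.
```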

Viewing the content in your WARC archives

You can use ikreymer/webarchiveplayer to view the content inside your WARC archives. It requires Python 2, so install it with pip instead of pip3:

sudo apt-get install --no-install-recommends git build-essential python-dev python-pip

pip install --user git+https://github.com/ikreymer/webarchiveplayer

And use it with:

~/.local/bin/webarchiveplayer <path to WARC>

then point your browser to http://127.0.0.1:8090/

Inspecting WARC files in the terminal

zless is a wrapper over less that can be used to view raw WARC content:

zless DIR/FILE.warc.gz

zless -S will turn off line wrapping.

Note that grab-site requests uncompressed HTTP responses to avoid double-compression in .warc.gz files and to make zless output more useful. However, some servers send compressed responses anyway.
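Beyond paging through a file with zless, the record headers can be filtered to list which URLs a WARC contains. The sketch below builds a tiny gzip file that mimics WARC record headers as a stand-in for a real DIR/FILE.warc.gz, then extracts the WARC-Target-URI lines; the sample record is invented.

```shell
# Build a tiny gzip file that mimics WARC record headers, as a stand-in
# for a real DIR/FILE.warc.gz.
sample="$(mktemp)"
printf 'WARC/1.0\r\nWARC-Type: response\r\nWARC-Target-URI: http://example.com/\r\n\r\n' | gzip -c > "$sample"

# gzip -dc decompresses to stdout; grep -a treats binary payloads as text;
# tr strips the carriage returns that end WARC header lines.
uris="$(gzip -dc "$sample" | grep -a '^WARC-Target-URI:' | tr -d '\r')"
printf '%s\n' "$uris"
```

On a real archive the same URL usually appears in several records (request, response, and so on), so piping the result through sort -u gives a shorter list.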

Thanks

grab-site is made possible only because of wpull, written by Christopher Foo, who spent a year making something much better than wget. ArchiveTeam's most pressing issue with wget at the time was that it kept the entire URL queue in memory instead of on disk. wpull has many other advantages over wget, including better link extraction and Python hooks.

Thanks to David Yip, who created ArchiveBot. The wpull hooks in ArchiveBot served as the basis for grab-site. The original ArchiveBot dashboard inspired the newer dashboard now used in both projects.

Help

Bug reports, discussion, and ideas for grab-site are welcome in grab-site/issues. If you are affected by an existing issue, please +1 it.

If a problem happens when running just ~/.local/bin/wpull -r URL (no grab-site), you may want to report it to wpull/issues instead.